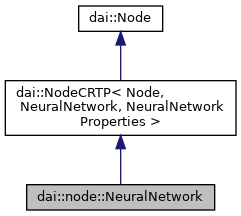

NeuralNetwork node. Runs a neural inference on input data.

#include <NeuralNetwork.hpp>

Public Member Functions | |

| int | getNumInferenceThreads () |

| NeuralNetwork (const std::shared_ptr< PipelineImpl > &par, int64_t nodeId) | |

| NeuralNetwork (const std::shared_ptr< PipelineImpl > &par, int64_t nodeId, std::unique_ptr< Properties > props) | |

| void | setBlob (const dai::Path &path) |

| void | setBlob (OpenVINO::Blob blob) |

| void | setBlobPath (const dai::Path &path) |

| void | setNumInferenceThreads (int numThreads) |

| void | setNumNCEPerInferenceThread (int numNCEPerThread) |

| void | setNumPoolFrames (int numFrames) |

Public Member Functions inherited from dai::NodeCRTP< Node, NeuralNetwork, NeuralNetworkProperties > | |

| std::unique_ptr< Node > | clone () const override |

| const char * | getName () const override |

Public Member Functions inherited from dai::Node | |

| virtual std::unique_ptr< Node > | clone () const =0 |

| Deep copy the node. | |

| AssetManager & | getAssetManager () |

| Get node AssetManager as a reference. | |

| const AssetManager & | getAssetManager () const |

| Get node AssetManager as a const reference. | |

| std::vector< Input * > | getInputRefs () |

| Retrieves references to the node's inputs. | |

| std::vector< const Input * > | getInputRefs () const |

| Retrieves const references to the node's inputs. | |

| std::vector< Input > | getInputs () |

| Retrieves all of the node's inputs. | |

| virtual const char * | getName () const =0 |

| Retrieves the node's name. | |

| std::vector< Output * > | getOutputRefs () |

| Retrieves references to the node's outputs. | |

| std::vector< const Output * > | getOutputRefs () const |

| Retrieves const references to the node's outputs. | |

| std::vector< Output > | getOutputs () |

| Retrieves all of the node's outputs. | |

| Pipeline | getParentPipeline () |

| const Pipeline | getParentPipeline () const |

| Node (const std::shared_ptr< PipelineImpl > &p, Id nodeId, std::unique_ptr< Properties > props) | |

| Constructs Node. | |

| virtual | ~Node ()=default |

Public Attributes | |

| Input | input {*this, "in", Input::Type::SReceiver, true, 5, true, {{DatatypeEnum::Buffer, true}}} |

| InputMap | inputs |

| Output | out {*this, "out", Output::Type::MSender, {{DatatypeEnum::NNData, false}}} |

| Output | passthrough {*this, "passthrough", Output::Type::MSender, {{DatatypeEnum::Buffer, true}}} |

| OutputMap | passthroughs |

Public Attributes inherited from dai::NodeCRTP< Node, NeuralNetwork, NeuralNetworkProperties > | |

| Properties & | properties |

| Underlying properties. | |

Public Attributes inherited from dai::Node | |

| const Id | id |

| Id of node. | |

| Properties & | properties |

Static Public Attributes | |

| constexpr static const char * | NAME = "NeuralNetwork" |

Protected Member Functions | |

| tl::optional< OpenVINO::Version > | getRequiredOpenVINOVersion () override |

Protected Member Functions inherited from dai::Node | |

| virtual Properties & | getProperties () |

| void | setInputMapRefs (InputMap *inMapRef) |

| void | setInputMapRefs (std::initializer_list< InputMap * > l) |

| void | setInputRefs (Input *inRef) |

| void | setInputRefs (std::initializer_list< Input * > l) |

| void | setOutputMapRefs (OutputMap *outMapRef) |

| void | setOutputMapRefs (std::initializer_list< OutputMap * > l) |

| void | setOutputRefs (Output *outRef) |

| void | setOutputRefs (std::initializer_list< Output * > l) |

Protected Attributes | |

| tl::optional< OpenVINO::Version > | networkOpenvinoVersion |

Protected Attributes inherited from dai::Node | |

| AssetManager | assetManager |

| std::unordered_map< std::string, InputMap * > | inputMapRefs |

| std::unordered_map< std::string, Input * > | inputRefs |

| std::unordered_map< std::string, OutputMap * > | outputMapRefs |

| std::unordered_map< std::string, Output * > | outputRefs |

| std::weak_ptr< PipelineImpl > | parent |

| copyable_unique_ptr< Properties > | propertiesHolder |

Additional Inherited Members | |

Public Types inherited from dai::NodeCRTP< Node, NeuralNetwork, NeuralNetworkProperties > | |

| using | Properties = NeuralNetworkProperties |

Public Types inherited from dai::Node | |

| using | Id = std::int64_t |

| Node identifier. Unique for every node on a single Pipeline. | |

Detailed Description

NeuralNetwork node. Runs a neural inference on input data.

Definition at line 18 of file NeuralNetwork.hpp.

Constructor & Destructor Documentation

◆ NeuralNetwork() [1/2]

| NeuralNetwork::NeuralNetwork | ( | const std::shared_ptr< PipelineImpl > & | par, |

| int64_t | nodeId | ||

| ) |

Definition at line 9 of file NeuralNetwork.cpp.

◆ NeuralNetwork() [2/2]

| NeuralNetwork::NeuralNetwork | ( | const std::shared_ptr< PipelineImpl > & | par, |

| int64_t | nodeId, | ||

| std::unique_ptr< Properties > | props | ||

| ) |

Definition at line 11 of file NeuralNetwork.cpp.

Member Function Documentation

◆ getNumInferenceThreads()

| int NeuralNetwork::getNumInferenceThreads | ( | ) |

Returns how many inference threads will be used to run the network.

- Returns

- Number of threads: 0, 1, or 2. Zero means AUTO.

Definition at line 53 of file NeuralNetwork.cpp.
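The return-value convention above (0, 1, or 2, with 0 meaning AUTO) can be made explicit at a call site. The following is a minimal sketch only: `describeInferenceThreads` is a hypothetical helper, not part of the library, and rejecting other values with an exception is an assumption made for illustration.

```cpp
#include <stdexcept>
#include <string>

// Hypothetical helper (not part of the library): turns the value returned by
// getNumInferenceThreads() into a readable label. Per the documentation above,
// the value is 0, 1, or 2, and 0 means AUTO.
inline std::string describeInferenceThreads(int numThreads) {
    switch(numThreads) {
        case 0:
            return "AUTO";
        case 1:
            return "1 thread";
        case 2:
            return "2 threads";
        default:
            // Assumption: anything outside the documented range is treated as an error here.
            throw std::invalid_argument("expected 0, 1, or 2 inference threads");
    }
}
```

A caller could pass the getter's result straight in, for example when logging a node's configuration.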

◆ getRequiredOpenVINOVersion()

| tl::optional< OpenVINO::Version > NeuralNetwork::getRequiredOpenVINOVersion | ( | ) | override protected virtual |

Reimplemented from dai::Node.

Definition at line 21 of file NeuralNetwork.cpp.

◆ setBlob() [1/2]

| void NeuralNetwork::setBlob | ( | const dai::Path & | path | ) |

Same functionality as setBlobPath(). Loads the network blob into assets and uses it once the pipeline is started.

- Exceptions

- Error if the file doesn't exist or isn't a valid network blob.

- Parameters

- path: Path to the network blob.

Definition at line 30 of file NeuralNetwork.cpp.

◆ setBlob() [2/2]

| void NeuralNetwork::setBlob | ( | OpenVINO::Blob | blob | ) |

Loads the network blob into assets and uses it once the pipeline is started.

- Parameters

- blob: Network blob.

Definition at line 34 of file NeuralNetwork.cpp.

◆ setBlobPath()

| void NeuralNetwork::setBlobPath | ( | const dai::Path & | path | ) |

Loads the network blob into assets and uses it once the pipeline is started.

- Exceptions

- Error if the file doesn't exist or isn't a valid network blob.

- Parameters

- path: Path to the network blob.

Definition at line 26 of file NeuralNetwork.cpp.

◆ setNumInferenceThreads()

| void NeuralNetwork::setNumInferenceThreads | ( | int | numThreads | ) |

Sets how many threads the node should use to run the network.

- Parameters

- numThreads: Number of threads to dedicate to this node.

Definition at line 45 of file NeuralNetwork.cpp.

◆ setNumNCEPerInferenceThread()

| void NeuralNetwork::setNumNCEPerInferenceThread | ( | int | numNCEPerThread | ) |

Sets how many Neural Compute Engines (NCEs) a single thread should use for inference.

- Parameters

- numNCEPerThread: Number of NCEs per thread.

Definition at line 49 of file NeuralNetwork.cpp.

◆ setNumPoolFrames()

| void NeuralNetwork::setNumPoolFrames | ( | int | numFrames | ) |

Specifies how many frames will be available in the pool.

- Parameters

- numFrames: Number of frames the pool will have.

Definition at line 41 of file NeuralNetwork.cpp.
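The setters documented above are typically called together while building a pipeline. The sketch below shows one way to group them, under stated assumptions: `configureNeuralNetwork` and `RecordingNode` are hypothetical names introduced for illustration, the stand-in struct only mimics the setter names and is not the real `dai::node::NeuralNetwork`, and the numeric values are placeholders rather than recommended settings.

```cpp
#include <string>

// Stand-in for illustration only: records the values passed to the setters
// documented above. It is NOT the real dai::node::NeuralNetwork.
struct RecordingNode {
    std::string blobPath;
    int numThreads = 0;
    int numNCEPerThread = 0;
    int numPoolFrames = 0;

    void setBlobPath(const std::string& path) { blobPath = path; }
    void setNumInferenceThreads(int n) { numThreads = n; }
    void setNumNCEPerInferenceThread(int n) { numNCEPerThread = n; }
    void setNumPoolFrames(int n) { numPoolFrames = n; }
};

// Hypothetical helper: works with any node type that exposes these four setters.
// The values below are illustrative, not recommended defaults.
template <typename NodeT>
void configureNeuralNetwork(NodeT& node, const std::string& blobPath) {
    node.setBlobPath(blobPath);           // load the blob into assets
    node.setNumInferenceThreads(2);       // threads dedicated to this node
    node.setNumNCEPerInferenceThread(1);  // NCEs used by each thread
    node.setNumPoolFrames(4);             // frames available in the pool
}
```

Because the helper is a template, it should also accept a real node, assuming the node's `setBlobPath` parameter can be constructed from a `std::string`; that usage is an assumption and is not verified on this page.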

Member Data Documentation

◆ input

| Input dai::node::NeuralNetwork::input {*this, "in", Input::Type::SReceiver, true, 5, true, {{DatatypeEnum::Buffer, true}}} |

Input message with data to be inferred upon. The default queue is blocking with size 5.

Definition at line 34 of file NeuralNetwork.hpp.

◆ inputs

| InputMap dai::node::NeuralNetwork::inputs |

Inputs mapped to network inputs. Useful for inferring from separate data sources. The default input is non-blocking with queue size 1 and waits for messages.

Definition at line 52 of file NeuralNetwork.hpp.
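The two defaults described for `input` (blocking, size 5) and `inputs` (non-blocking, size 1) behave differently once the queue is full. The toy model below sketches that difference in a single thread. It is not the library's implementation: `ToyInputQueue` is a hypothetical class, a full blocking queue is modeled by rejecting the push (a real sender would wait), and the drop-oldest policy for a full non-blocking queue is an assumption that this page does not state.

```cpp
#include <cstddef>
#include <deque>

// Toy single-threaded model of a node input queue; not the library's implementation.
template <typename T>
class ToyInputQueue {
   public:
    ToyInputQueue(bool blocking, std::size_t maxSize) : blocking_(blocking), maxSize_(maxSize) {}

    // Returns false when the message cannot be accepted right now.
    // A full blocking queue rejects the push (a real sender would wait instead).
    // ASSUMPTION: a full non-blocking queue discards its oldest message.
    bool push(const T& msg) {
        if(queue_.size() >= maxSize_) {
            if(blocking_ || queue_.empty()) return false;
            queue_.pop_front();
        }
        queue_.push_back(msg);
        return true;
    }

    std::size_t size() const { return queue_.size(); }
    const T& front() const { return queue_.front(); }

   private:
    bool blocking_;
    std::size_t maxSize_;
    std::deque<T> queue_;
};
```

Under these assumptions, `ToyInputQueue<int>(true, 5)` mirrors the default `input` queue and `ToyInputQueue<int>(false, 1)` mirrors a default entry in `inputs`, which always holds only the most recent message.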

◆ NAME

| constexpr static const char * dai::node::NeuralNetwork::NAME = "NeuralNetwork" | static constexpr |

Definition at line 20 of file NeuralNetwork.hpp.

◆ networkOpenvinoVersion

| tl::optional< OpenVINO::Version > dai::node::NeuralNetwork::networkOpenvinoVersion | protected |

Definition at line 24 of file NeuralNetwork.hpp.

◆ out

| Output dai::node::NeuralNetwork::out {*this, "out", Output::Type::MSender, {{DatatypeEnum::NNData, false}}} |

Outputs an NNData message that carries the inference results.

Definition at line 39 of file NeuralNetwork.hpp.

◆ passthrough

| Output dai::node::NeuralNetwork::passthrough {*this, "passthrough", Output::Type::MSender, {{DatatypeEnum::Buffer, true}}} |

Passthrough message on which the inference was performed.

Useful when the input queue is set to non-blocking behavior.

Definition at line 46 of file NeuralNetwork.hpp.

◆ passthroughs

| OutputMap dai::node::NeuralNetwork::passthroughs |

Passthroughs that correspond to the specified input.

Definition at line 57 of file NeuralNetwork.hpp.

The documentation for this class was generated from the following files: