#include "benchmark/benchmark.h"
#include "benchmark_api_internal.h"
#include "internal_macros.h"
#include <sys/resource.h>
#include <sys/time.h>
#include <unistd.h>
#include <algorithm>
#include <atomic>
#include <condition_variable>
#include <cstdio>
#include <cstdlib>
#include <cstring>
#include <fstream>
#include <iostream>
#include <memory>
#include <thread>
#include "check.h"
#include "colorprint.h"
#include "commandlineflags.h"
#include "complexity.h"
#include "counter.h"
#include "log.h"
#include "mutex.h"
#include "re.h"
#include "stat.h"
#include "string_util.h"
#include "sysinfo.h"
#include "timers.h"

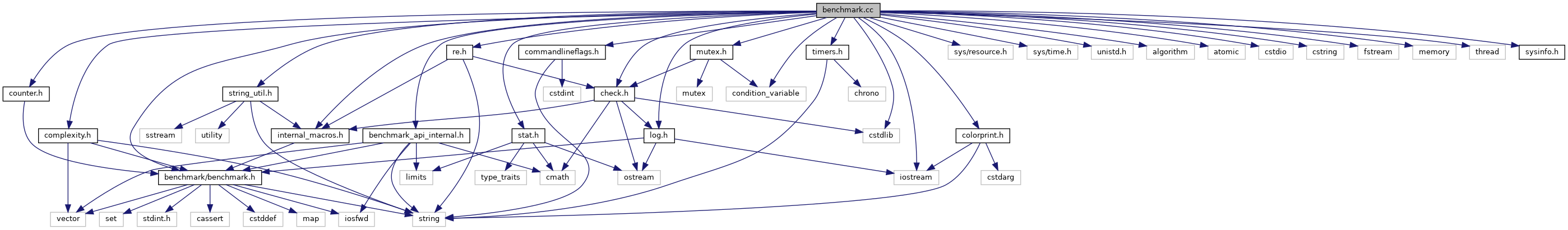

Include dependency graph for benchmark.cc:

Classes

| Kind | Name |
|---|---|
| struct | benchmark::internal::ThreadManager::Result |
| class | benchmark::internal::ThreadManager |
| class | benchmark::internal::ThreadTimer |

Namespaces

| Name |
|---|
| benchmark |
| benchmark::internal |

Functions

| Return type | Declaration |
|---|---|
| | DEFINE_bool (benchmark_counters_tabular, false, "Whether to use tabular format when printing user counters to " "the console. Valid values: 'true'/'yes'/1, 'false'/'no'/0." "Defaults to false.") |
| | DEFINE_bool (benchmark_list_tests, false, "Print a list of benchmarks. This option overrides all other " "options.") |
| | DEFINE_bool (benchmark_report_aggregates_only, false, "Report the result of each benchmark repetitions. When 'true' is " "specified only the mean, standard deviation, and other statistics " "are reported for repeated benchmarks.") |
| | DEFINE_double (benchmark_min_time, 0.5, "Minimum number of seconds we should run benchmark before " "results are considered significant. For cpu-time based " "tests, this is the lower bound on the total cpu time " "used by all threads that make up the test. For real-time " "based tests, this is the lower bound on the elapsed time " "of the benchmark execution, regardless of number of " "threads.") |
| | DEFINE_int32 (benchmark_repetitions, 1, "The number of runs of each benchmark. If greater than 1, the " "mean and standard deviation of the runs will be reported.") |
| | DEFINE_int32 (v, 0, "The level of verbose logging to output") |
| | DEFINE_string (benchmark_color, "auto", "Whether to use colors in the output. Valid values: " "'true'/'yes'/1, 'false'/'no'/0, and 'auto'. 'auto' means to use " "colors if the output is being sent to a terminal and the TERM " "environment variable is set to a terminal type that supports " "colors.") |
| | DEFINE_string (benchmark_filter, ".", "A regular expression that specifies the set of benchmarks " "to execute. If this flag is empty, no benchmarks are run. " "If this flag is the string \"all\", all benchmarks linked " "into the process are run.") |
| | DEFINE_string (benchmark_format, "console", "The format to use for console output. Valid values are " "'console', 'json', or 'csv'.") |
| | DEFINE_string (benchmark_out, "", "The file to write additonal output to") |
| | DEFINE_string (benchmark_out_format, "json", "The format to use for file output. Valid values are " "'console', 'json', or 'csv'.") |
| ConsoleReporter::OutputOptions | benchmark::internal::GetOutputOptions (bool force_no_color) |
| void | benchmark::Initialize (int *argc, char **argv) |
| int | benchmark::internal::InitializeStreams () |
| bool | benchmark::internal::IsZero (double n) |
| void | benchmark::internal::ParseCommandLineFlags (int *argc, char **argv) |
| void | benchmark::internal::PrintUsageAndExit () |
| bool | benchmark::ReportUnrecognizedArguments (int argc, char **argv) |
| size_t | benchmark::RunSpecifiedBenchmarks () |
| size_t | benchmark::RunSpecifiedBenchmarks (BenchmarkReporter *console_reporter) |
| size_t | benchmark::RunSpecifiedBenchmarks (BenchmarkReporter *console_reporter, BenchmarkReporter *file_reporter) |
| void | benchmark::internal::UseCharPointer (char const volatile *) |

Function Documentation

◆ DEFINE_bool() [1/3]

DEFINE_bool (benchmark_counters_tabular, false, "Whether to use tabular format when printing user counters to " "the console. Valid values: 'true'/'yes'/1, 'false'/'no'/0." "Defaults to false.")
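
The help text above lists the accepted boolean spellings: 'true'/'yes'/1 and 'false'/'no'/0. The following is a minimal sketch of a parser for exactly those spellings. `ParseBoolValue` is a hypothetical helper written for illustration; it is not the parsing code used by the library's `commandlineflags.h`, and case handling is an assumption (only lowercase spellings are accepted here).

```cpp
#include <cassert>
#include <string>

// Hypothetical helper, not part of the benchmark library.
// Parses the boolean spellings named in the flag's help text.
// On success, stores the parsed value in *out and returns true.
// For any other spelling, returns false and leaves *out untouched.
bool ParseBoolValue(const std::string& text, bool* out) {
  assert(out != nullptr);
  if (text == "true" || text == "yes" || text == "1") {
    *out = true;
    return true;
  }
  if (text == "false" || text == "no" || text == "0") {
    *out = false;
    return true;
  }
  return false;
}
```

Returning a success flag separately from the parsed value lets a caller distinguish "the user asked for false" from "the user typed something unrecognized".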

◆ DEFINE_bool() [2/3]

DEFINE_bool (benchmark_list_tests, false, "Print a list of benchmarks. This option overrides all other " "options.")

◆ DEFINE_bool() [3/3]

DEFINE_bool (benchmark_report_aggregates_only, false, "Report the result of each benchmark repetitions. When 'true' is " "specified only the mean, standard deviation, and other statistics " "are reported for repeated benchmarks.")

◆ DEFINE_double()

DEFINE_double (benchmark_min_time, 0.5, "Minimum number of seconds we should run benchmark before " "results are considered significant. For cpu-time based " "tests, this is the lower bound on the total cpu time " "used by all threads that make up the test. For real-time " "based tests, this is the lower bound on the elapsed time " "of the benchmark execution, regardless of number of " "threads.")
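
The help text distinguishes two ways of comparing a run against the minimum time: CPU-time based tests use the total CPU time summed over all threads, while real-time based tests use elapsed wall-clock time regardless of thread count. A minimal sketch of that decision follows. The `Measurement` struct and `HasRunLongEnough` function are hypothetical names chosen for illustration; the actual stopping logic in benchmark.cc may include further conditions not described here.

```cpp
#include <cassert>

// Hypothetical record of one timed run (names are illustrative only).
struct Measurement {
  double real_time_seconds;       // wall-clock time elapsed during the run
  double total_cpu_time_seconds;  // CPU time summed over all threads
};

// Returns true when the run meets the minimum-time bound as described in
// the benchmark_min_time help text:
//  - real-time based tests compare elapsed wall-clock time;
//  - CPU-time based tests compare CPU time totalled over all threads.
bool HasRunLongEnough(const Measurement& m, bool use_real_time,
                      double min_time_seconds) {
  assert(min_time_seconds >= 0.0);
  const double measured =
      use_real_time ? m.real_time_seconds : m.total_cpu_time_seconds;
  return measured >= min_time_seconds;
}
```

For example, four threads that each consume 0.2 s of CPU during 0.2 s of wall-clock time total 0.8 s of CPU time. Under this sketch that run satisfies a 0.5 s bound as a CPU-time test but not as a real-time test.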

◆ DEFINE_int32() [1/2]

DEFINE_int32 (benchmark_repetitions, 1, "The number of runs of each benchmark. If greater than 1, the " "mean and standard deviation of the runs will be reported.")
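
When a benchmark is repeated, the help text says the mean and standard deviation of the runs are reported. The sketch below shows one way to compute those two aggregates from a list of per-run times. `RunStats` and `SummarizeRuns` are hypothetical names, and the use of the sample standard deviation (dividing by n - 1) is an assumption; the library's own aggregation lives in its statistics code and may differ.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical aggregate of repeated runs (illustrative only).
struct RunStats {
  double mean;
  double stddev;
};

// Computes the arithmetic mean and the sample standard deviation
// (divisor n - 1) of the given per-run times. With a single run the
// standard deviation is defined here as 0.
RunStats SummarizeRuns(const std::vector<double>& times) {
  assert(!times.empty());
  const double n = static_cast<double>(times.size());

  double sum = 0.0;
  for (double t : times) sum += t;
  const double mean = sum / n;

  double squared_deviation = 0.0;
  for (double t : times) squared_deviation += (t - mean) * (t - mean);

  const double stddev =
      times.size() > 1 ? std::sqrt(squared_deviation / (n - 1.0)) : 0.0;
  return RunStats{mean, stddev};
}
```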

◆ DEFINE_int32() [2/2]

DEFINE_int32 (v, 0, "The level of verbose logging to output")

◆ DEFINE_string() [1/5]

DEFINE_string (benchmark_color, "auto", "Whether to use colors in the output. Valid values: " "'true'/'yes'/1, 'false'/'no'/0, and 'auto'. 'auto' means to use " "colors if the output is being sent to a terminal and the TERM " "environment variable is set to a terminal type that supports " "colors.")
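
The 'auto' setting described above combines two checks: the output must go to a terminal, and TERM must name a terminal type that supports colors. The sketch below resolves a flag value under those rules. `TermSupportsColor` and `ResolveColorFlag` are hypothetical helpers, and the list of color-capable terminal names is an illustrative assumption rather than the list used in `colorprint.h`.

```cpp
#include <cassert>
#include <cstring>
#include <string>

// Hypothetical check: does the TERM value name a color-capable terminal?
// The list below is an assumption made for this example.
bool TermSupportsColor(const char* term) {
  if (term == nullptr) return false;
  static const char* const kColorTerms[] = {
      "xterm", "xterm-color", "xterm-256color", "screen", "linux", "cygwin"};
  for (const char* candidate : kColorTerms) {
    if (std::strcmp(term, candidate) == 0) return true;
  }
  return false;
}

// Resolves a benchmark_color-style value into a yes/no decision.
// Returns false if the value is not one of the documented spellings.
bool ResolveColorFlag(const std::string& value, bool is_terminal,
                      const char* term, bool* use_color) {
  assert(use_color != nullptr);
  if (value == "auto") {
    // 'auto': color only when writing to a color-capable terminal.
    *use_color = is_terminal && TermSupportsColor(term);
    return true;
  }
  if (value == "true" || value == "yes" || value == "1") {
    *use_color = true;
    return true;
  }
  if (value == "false" || value == "no" || value == "0") {
    *use_color = false;
    return true;
  }
  return false;
}
```

On a POSIX system, a caller could supply `is_terminal` from `isatty(fileno(stdout))` and `term` from `std::getenv("TERM")`. Passing them in as parameters keeps the decision logic independent of the environment.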

◆ DEFINE_string() [2/5]

DEFINE_string (benchmark_filter, ".", "A regular expression that specifies the set of benchmarks " "to execute. If this flag is empty, no benchmarks are run. " "If this flag is the string \"all\", all benchmarks linked " "into the process are run.")
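
The filter rules in the help text (a regular expression selects benchmarks, an empty value selects none, and the string "all" selects everything) can be sketched with the standard `<regex>` library. `SelectBenchmarks` is a hypothetical function for illustration. The library uses its own wrapper from `re.h`, and its handling of special values may differ from the help text; the use of partial matching via `std::regex_search` is also an assumption.

```cpp
#include <cassert>
#include <regex>
#include <string>
#include <vector>

// Hypothetical selection routine following the benchmark_filter help text.
// Throws std::regex_error if `filter` is not a valid regular expression.
std::vector<std::string> SelectBenchmarks(
    const std::vector<std::string>& names, const std::string& filter) {
  std::vector<std::string> selected;
  if (filter.empty()) return selected;  // empty filter: run nothing
  if (filter == "all") return names;    // "all": run every benchmark

  const std::regex pattern(filter);
  for (const std::string& name : names) {
    // Assumption: a name is selected if the pattern matches anywhere in it.
    if (std::regex_search(name, pattern)) selected.push_back(name);
  }
  assert(selected.size() <= names.size());
  return selected;
}
```

With partial matching, the default value "." matches any non-empty name, which is consistent with the default selecting every registered benchmark.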

◆ DEFINE_string() [3/5]

DEFINE_string (benchmark_format, "console", "The format to use for console output. Valid values are " "'console', 'json', or 'csv'.")

◆ DEFINE_string() [4/5]

DEFINE_string (benchmark_out, "", "The file to write additonal output to")