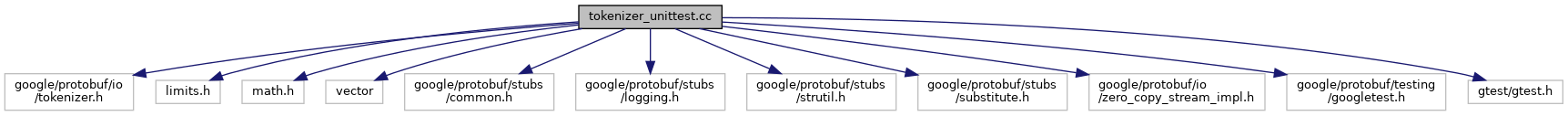

```cpp
#include <google/protobuf/io/tokenizer.h>
#include <limits.h>
#include <math.h>
#include <vector>
#include <google/protobuf/stubs/common.h>
#include <google/protobuf/stubs/logging.h>
#include <google/protobuf/stubs/strutil.h>
#include <google/protobuf/stubs/substitute.h>
#include <google/protobuf/io/zero_copy_stream_impl.h>
#include <google/protobuf/testing/googletest.h>
#include <gtest/gtest.h>
```


◆ TEST_1D

Value:

```cpp
 protected: \
  template <typename CaseType> \
  void DoSingleCase(const CaseType& CASES##_case); \
  ... \
        << #CASES " case #" << i << ": " << CASES[i]); \
    DoSingleCase(CASES[i]); \
  } \
} \
 \
template <typename CaseType> \
void FIXTURE##_##NAME##_DD::DoSingleCase(const CaseType& CASES##_case)
```

Definition at line 78 of file protobuf/src/google/protobuf/io/tokenizer_unittest.cc.
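The macro body above calls `DoSingleCase(CASES[i])` for each index `i` into `CASES` and labels every run with a message of the form `<CASES> case #<i>: <value>`. The following is a minimal stand-alone sketch of that data-driven loop, not the protobuf macro itself. `RunCases1D` is a hypothetical helper that collects the labels of failing cases in a vector instead of relying on googletest, and it assumes the case type can be streamed with `operator<<`.

```cpp
#include <cassert>
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical stand-in for the TEST_1D loop: calls `check` once per element
// of `cases` and, for every case that fails, records a label of the form
// "<name> case #<i>: <value>", mirroring the macro's trace message.
// CaseType must be printable with operator<<.
template <typename CaseType, std::size_t N, typename Check>
std::vector<std::string> RunCases1D(const char* name,
                                    const CaseType (&cases)[N], Check check) {
  std::vector<std::string> failures;
  for (std::size_t i = 0; i < N; ++i) {
    if (!check(cases[i])) {
      std::ostringstream label;
      label << name << " case #" << i << ": " << cases[i];
      failures.push_back(label.str());
    }
  }
  return failures;
}
```

For example, with `const char* kWords[] = {"foo", "quux", "ab"};` and a check that accepts strings of at most three characters, the only recorded failure is `"kWords case #1: quux"`. Keeping the per-case body in one function and the case table in a plain array means adding a test case is a one-line change to the array.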

◆ TEST_2D

Value:

```cpp
 protected: \
  template <typename CaseType1, typename CaseType2> \
  void DoSingleCase(const CaseType1& CASES1##_case, \
                    const CaseType2& CASES2##_case); \
  ... \
        << #CASES1 " case #" << i << ": " << CASES1[i] << ", " \
        << #CASES2 " case #" << j << ": " << CASES2[j]); \
      DoSingleCase(CASES1[i], CASES2[j]); \
    } \
  } \
} \
 \
template <typename CaseType1, typename CaseType2> \
void FIXTURE##_##NAME##_DD::DoSingleCase(const CaseType1& CASES1##_case, \
                                         const CaseType2& CASES2##_case)
```

Definition at line 96 of file protobuf/src/google/protobuf/io/tokenizer_unittest.cc.
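TEST_2D extends the same idea to the cross product of two case arrays. The body above calls `DoSingleCase(CASES1[i], CASES2[j])` inside two nested loops and labels each run with both indices and both values. The following is a minimal stand-alone sketch of that nesting, not protobuf's code. `RunCases2D` and `ChunkedCopy` are hypothetical names. The second dimension, a set of block sizes for reading the input in chunks, is only an illustrative assumption.

```cpp
#include <cassert>
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical stand-in for the TEST_2D loops: runs `check` on every pair
// (cases1[i], cases2[j]) and records a label for each failing pair in the
// form "<name1> case #<i>: <v1>, <name2> case #<j>: <v2>".
template <typename C1, std::size_t N1, typename C2, std::size_t N2,
          typename Check>
std::vector<std::string> RunCases2D(const char* name1, const C1 (&cases1)[N1],
                                    const char* name2, const C2 (&cases2)[N2],
                                    Check check) {
  std::vector<std::string> failures;
  for (std::size_t i = 0; i < N1; ++i) {
    for (std::size_t j = 0; j < N2; ++j) {
      if (!check(cases1[i], cases2[j])) {
        std::ostringstream label;
        label << name1 << " case #" << i << ": " << cases1[i] << ", "
              << name2 << " case #" << j << ": " << cases2[j];
        failures.push_back(label.str());
      }
    }
  }
  return failures;
}

// Hypothetical helper: rebuilds `input` from chunks of at most `block_size`
// characters. Precondition: block_size > 0.
std::string ChunkedCopy(const std::string& input, std::size_t block_size) {
  assert(block_size > 0);
  std::string out;
  for (std::size_t pos = 0; pos < input.size(); pos += block_size) {
    out += input.substr(pos, block_size);
  }
  return out;
}
```

Pairing every input with every block size (for instance `{"abc", "hello"}` with `{1, 2, 4}`) exercises six combinations from two short arrays. A check that `ChunkedCopy(in, bs) == in` should pass for all of them, and any failure label names both the input and the block size that broke it.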

◆ array_stream_

`ArrayInputStream array_stream_` (private)

◆ counter_

◆ detached_comments

`const char* detached_comments[10]`

◆ errors

◆ input

◆ next_leading_comments

`const char* next_leading_comments`

◆ output

`std::vector<Tokenizer::Token> output`

◆ prev_trailing_comments

`const char* prev_trailing_comments`

◆ recoverable

◆ text_

◆ type

`Tokenizer::TokenType type`
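The comment-related members listed above are plain C strings, and `detached_comments` is a fixed-capacity array of ten of them. The following is a minimal sketch, assuming a hypothetical `CommentCase` struct that groups these three fields with an assumed `input` string; the entries above do not say which struct owns each field. It shows one way such an array can be filled and counted. Aggregate initialization sets every unlisted slot to `nullptr`, so the populated entries are those before the first null pointer. Whether the test file itself counts entries this way is not stated here.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Capacity of the detached-comment array, matching the documented [10].
constexpr std::size_t kMaxDetached = 10;

// Hypothetical case record: the three comment fields come from the member
// list above; grouping them with an `input` string is an assumption.
struct CommentCase {
  std::string input;
  const char* prev_trailing_comments;
  const char* detached_comments[kMaxDetached];
  const char* next_leading_comments;
};

// Returns how many leading slots of detached_comments are set. Slots not
// listed in an aggregate initializer are value-initialized to nullptr, so
// the scan stops at the first null pointer or at the array's capacity.
std::size_t CountDetached(const CommentCase& c) {
  std::size_t n = 0;
  while (n < kMaxDetached && c.detached_comments[n] != nullptr) {
    ++n;
  }
  return n;
}
```

For example, `CommentCase c = {"x", "", {"first", "second"}, "next"};` yields `CountDetached(c) == 2`. A fixed array keeps each case a single brace-initialized literal at the cost of a hard upper bound on detached comments per case.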