#include <MultiSenseTypes.hh>

Public Member Functions

| Return type | Member |
|---|---|
| | Header () |
| virtual bool | inMask (DataSource mask) |

Public Attributes

| Type | Member |
|---|---|
| uint32_t | bitsPerPixel |
| uint32_t | exposure |
| int64_t | frameId |
| float | framesPerSecond |
| float | gain |
| uint32_t | height |
| const void * | imageDataP |
| uint32_t | imageLength |
| DataSource | source |
| uint32_t | timeMicroSeconds |
| uint32_t | timeSeconds |
| uint32_t | width |

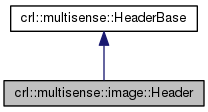

Detailed Description

Class containing image header information common to all image types. An instance is passed to every callback of type image::Callback that is subscribed to image data.

See crl::multisense::Channel::addIsolatedCallback for more details.

Example code to extract 8-bit image data from an image header and display it using OpenCV (assumes header.bitsPerPixel == 8):

```cpp
#include <cstdint>
#include <iostream>
#include <stdexcept>
#include <vector>

#include <signal.h>
#include <string.h>   // memcpy, strsignal
#include <unistd.h>   // usleep

#include <MultiSenseTypes.hh>
#include <MultiSenseChannel.hh>

//
// Note this example has only been tested under Linux

#include <opencv2/opencv.hpp>

volatile sig_atomic_t doneG = 0;

void signalHandler(int sig)
{
    std::cerr << "Shutting down on signal: " << strsignal(sig) << std::endl;
    doneG = 1;
}

class Camera
{
public:
    Camera(crl::multisense::Channel* channel);
    ~Camera();

    void imageCallback(const crl::multisense::image::Header& header);

private:
    crl::multisense::Channel* m_channel;
};

namespace {

//
// Shim for the C-style callbacks accepted by
// crl::multisense::Channel::addIsolatedCallback
void monoCallback(const crl::multisense::image::Header& header, void* userDataP)
{
    reinterpret_cast<Camera*>(userDataP)->imageCallback(header);
}

} // namespace

Camera::Camera(crl::multisense::Channel* channel):
    m_channel(channel)
{
    crl::multisense::Status status;

    //
    // Attach our monoCallback to our Channel instance. It will get
    // called every time there is new left or right luma image data.
    status = m_channel->addIsolatedCallback(monoCallback,
                                            crl::multisense::Source_Luma_Left | crl::multisense::Source_Luma_Right,
                                            this);

    //
    // Check to see if the callback was successfully attached
    if (crl::multisense::Status_Ok != status) {
        throw std::runtime_error("Unable to attach isolated callback");
    }

    //
    // Start streaming luma images for the left and right cameras.
    status = m_channel->startStreams(crl::multisense::Source_Luma_Left | crl::multisense::Source_Luma_Right);

    //
    // Check to see if the streams were successfully started. The destructor
    // does not run when a constructor throws, so detach the callback here.
    if (crl::multisense::Status_Ok != status) {
        m_channel->removeIsolatedCallback(monoCallback);
        throw std::runtime_error("Unable to start image streams");
    }
}

Camera::~Camera()
{
    crl::multisense::Status status;

    //
    // Remove our isolated callback. Destructors must not throw,
    // so failures are reported instead.
    status = m_channel->removeIsolatedCallback(monoCallback);

    if (crl::multisense::Status_Ok != status) {
        std::cerr << "Unable to remove isolated callback" << std::endl;
    }

    //
    // Stop streaming luma images for the left and right cameras
    status = m_channel->stopStreams(crl::multisense::Source_Luma_Left | crl::multisense::Source_Luma_Right);

    if (crl::multisense::Status_Ok != status) {
        std::cerr << "Unable to stop streams" << std::endl;
    }
}

void Camera::imageCallback(const crl::multisense::image::Header& header)
{
    //
    // This example only handles 8-bit images whose buffer covers width * height bytes
    if (8 != header.bitsPerPixel ||
        header.imageLength < static_cast<size_t>(header.width) * header.height) {
        return;
    }

    //
    // Create a container for the image data and copy the image data
    // from the header's image data pointer into it
    std::vector<uint8_t> imageData(header.imageLength);
    memcpy(imageData.data(), header.imageDataP, header.imageLength);

    //
    // Create an OpenCV matrix using our image container
    cv::Mat_<uint8_t> imageMat(header.height, header.width, imageData.data());

    //
    // Display the image using OpenCV, waiting roughly one frame period.
    // cv::waitKey(0) would block forever, so never pass less than 1 ms.
    cv::namedWindow("Example");
    cv::imshow("Example", imageMat);

    int delayMs = 1;
    if (header.framesPerSecond > 0.0f) {
        delayMs = static_cast<int>(1000.0 / header.framesPerSecond);
    }
    cv::waitKey(delayMs > 0 ? delayMs : 1);
}

int main()
{
    //
    // Setup a signal handler to kill the application
    signal(SIGINT, signalHandler);

    //
    // Instantiate a channel connecting to a sensor at the factory default
    // IP address
    crl::multisense::Channel* channel = crl::multisense::Channel::Create("10.66.171.21");

    if (NULL == channel) {
        std::cerr << "Unable to create a channel to the sensor" << std::endl;
        return 1;
    }

    channel->setMtu(7200);

    try
    {
        Camera camera(channel);

        while (!doneG)
        {
            usleep(100000);
        }
    }
    catch (const std::exception& e)
    {
        std::cerr << e.what() << std::endl;
    }

    //
    // Destroy the channel instance
    crl::multisense::Channel::Destroy(channel);

    return 0;
}
```

Definition at line 374 of file MultiSenseTypes.hh.

Constructor & Destructor Documentation

| crl::multisense::image::Header::Header () [inline] |

Default Constructor

Definition at line 406 of file MultiSenseTypes.hh.

Member Function Documentation

| virtual bool crl::multisense::image::Header::inMask (DataSource mask) [inline, virtual] |

Determines whether the image described by this header (its source) is included in the specified data-source mask.

Reimplemented from crl::multisense::HeaderBase.

Definition at line 413 of file MultiSenseTypes.hh.
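A minimal, self-contained sketch of the mask test that inMask describes, assuming DataSource values are single-bit flags combined with bitwise OR (as the example's `Source_Luma_Left | Source_Luma_Right` expression suggests). `FakeHeader` and the `kSource*` constants are hypothetical stand-ins, not LibMultiSense definitions, and the library's actual implementation may differ.

```cpp
#include <cstdint>

// Hypothetical stand-ins: DataSource is modeled as a 32-bit flag word.
// These values are illustrative only, not the library's actual constants.
using DataSource = uint32_t;
constexpr DataSource kSourceLumaLeft  = 1u << 0;
constexpr DataSource kSourceLumaRight = 1u << 1;
constexpr DataSource kSourceDisparity = 1u << 2;

// Hypothetical header carrying only the field the mask test needs.
struct FakeHeader {
    DataSource source = 0;

    // True when this header's source bit is set in the requested mask.
    bool inMask(DataSource mask) const { return (mask & source) != 0; }
};
```

Under that assumption, a callback subscribed to both luma streams could call `header.inMask(crl::multisense::Source_Luma_Left)` to tell left images apart from right ones.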

Member Data Documentation

| uint32_t crl::multisense::image::Header::bitsPerPixel |

Bits per pixel in the image.

Definition at line 380 of file MultiSenseTypes.hh.

| uint32_t crl::multisense::image::Header::exposure |

The image exposure time, in microseconds.

Definition at line 393 of file MultiSenseTypes.hh.

| int64_t crl::multisense::image::Header::frameId |

Unique ID used to identify an image. Frame IDs increase sequentially from the device.

Definition at line 386 of file MultiSenseTypes.hh.
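Because frame IDs increase sequentially, a jump between consecutive IDs from the same source can be used to spot dropped frames. The sketch below tracks the last ID seen per source; `FrameGapTracker` is a hypothetical helper, and it assumes IDs from one source step by exactly 1 per delivered frame with no resets (a reduced stream rate or a sensor restart would break that assumption).

```cpp
#include <cstdint>
#include <unordered_map>

// Hypothetical helper: counts frames that appear to be missing, per source,
// assuming frame IDs from one source step by exactly 1.
class FrameGapTracker {
public:
    // Records frameId for the given source and returns how many IDs were
    // skipped since the previous frame from that source (0 if none or first).
    int64_t update(uint32_t source, int64_t frameId) {
        int64_t skipped = 0;
        auto it = m_last.find(source);
        if (it != m_last.end() && frameId > it->second + 1) {
            skipped = frameId - it->second - 1;
        }
        m_last[source] = frameId;
        return skipped;
    }

private:
    std::unordered_map<uint32_t, int64_t> m_last;  // last frameId seen per source
};
```

Tracking per source matters when a single callback receives several streams, for example left and right luma images that may carry the same frame ID.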

| float crl::multisense::image::Header::framesPerSecond |

The number of frames per second currently streaming from the device.

Definition at line 397 of file MultiSenseTypes.hh.
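The example's `cv::waitKey` call derives its delay from this field. A sketch of that conversion as a standalone function, rounding to the nearest millisecond and guarding against zero, negative, or non-finite rates; `displayDelayMs` is a hypothetical helper, and the 1 ms fallback is an arbitrary choice rather than anything specified by the library.

```cpp
#include <cmath>
#include <limits>

// Hypothetical helper: converts a frame rate into a per-frame delay in whole
// milliseconds. The result is never below 1, because cv::waitKey(0) waits
// indefinitely for a key press.
int displayDelayMs(float framesPerSecond) {
    // Zero, negative, NaN, or infinite rates fall back to the shortest delay.
    if (!(framesPerSecond > 0.0f) || !std::isfinite(framesPerSecond)) {
        return 1;
    }
    const double ms = std::round(1000.0 / framesPerSecond);
    if (ms < 1.0) {
        return 1;
    }
    // Clamp absurdly low rates so the conversion to int cannot overflow.
    if (ms > static_cast<double>(std::numeric_limits<int>::max())) {
        return std::numeric_limits<int>::max();
    }
    return static_cast<int>(ms);
}
```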

| float crl::multisense::image::Header::gain |

The imager gain the image was captured with.

Definition at line 395 of file MultiSenseTypes.hh.

| uint32_t crl::multisense::image::Header::height |

Height of the image, in pixels.

Definition at line 384 of file MultiSenseTypes.hh.

| const void* crl::multisense::image::Header::imageDataP |

A pointer to the image data

Definition at line 401 of file MultiSenseTypes.hh.

| uint32_t crl::multisense::image::Header::imageLength |

The length, in bytes, of the image data pointed to by imageDataP.

Definition at line 399 of file MultiSenseTypes.hh.
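Before wrapping imageDataP in a typed buffer, as the example does, it can be worth checking imageLength against the image dimensions. This sketch assumes an uncompressed, tightly packed image with no row padding, so the expected size is width * height * bitsPerPixel / 8 bytes (rounded up); that layout is an assumption, not something this page guarantees for every image type. `expectedImageLength` and `hasExpectedLength` are hypothetical helpers.

```cpp
#include <cstdint>

// Hypothetical helper: bytes needed for a tightly packed image
// (no row padding), rounding a trailing partial byte up.
uint64_t expectedImageLength(uint32_t width, uint32_t height, uint32_t bitsPerPixel) {
    // Widen before multiplying so large images cannot overflow 32 bits.
    const uint64_t bits = static_cast<uint64_t>(width) * height * bitsPerPixel;
    return (bits + 7) / 8;
}

// True when the reported length is large enough for the stated dimensions.
bool hasExpectedLength(uint32_t width, uint32_t height,
                       uint32_t bitsPerPixel, uint32_t imageLength) {
    return imageLength >= expectedImageLength(width, height, bitsPerPixel);
}
```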

| DataSource crl::multisense::image::Header::source |

The DataSource corresponding to imageDataP (for example, Source_Luma_Left).

Definition at line 378 of file MultiSenseTypes.hh.

| uint32_t crl::multisense::image::Header::timeMicroSeconds |

The microseconds component of the time at which the image was captured.

Definition at line 390 of file MultiSenseTypes.hh.

| uint32_t crl::multisense::image::Header::timeSeconds |

The seconds component of the time at which the image was captured.

Definition at line 388 of file MultiSenseTypes.hh.
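The two time fields together form a single capture timestamp. A sketch of combining them, assuming timeMicroSeconds holds only the sub-second remainder (0 to 999999) of timeSeconds; that split is an assumption, and `captureTimeMicros` and `captureTimeSeconds` are hypothetical helpers.

```cpp
#include <cstdint>

// Hypothetical helper: capture time as one integer count of microseconds,
// assuming timeMicroSeconds is the sub-second remainder of timeSeconds.
int64_t captureTimeMicros(uint32_t timeSeconds, uint32_t timeMicroSeconds) {
    return static_cast<int64_t>(timeSeconds) * 1000000 + timeMicroSeconds;
}

// The same timestamp as floating-point seconds; convenient for arithmetic,
// while the integer form above avoids any rounding.
double captureTimeSeconds(uint32_t timeSeconds, uint32_t timeMicroSeconds) {
    return timeSeconds + timeMicroSeconds * 1e-6;
}
```

The integer form is the safer choice for comparing or subtracting timestamps, for example when matching left and right images captured at the same instant.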

| uint32_t crl::multisense::image::Header::width |

Width of the image, in pixels.

Definition at line 382 of file MultiSenseTypes.hh.

The documentation for this class was generated from the following file: MultiSenseTypes.hh