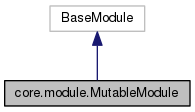

Inheritance diagram for core.module.MutableModule:

Public Member Functions

| def | __init__ |
| def | backward |
| def | bind |
| def | data_names |
| def | data_shapes |
| def | fit |
| def | forward |
| def | get_input_grads |
| def | get_outputs |
| def | get_params |
| def | init_optimizer |
| def | init_params |
| def | install_monitor |
| def | label_shapes |
| def | output_names |
| def | output_shapes |
| def | save_checkpoint |
| def | update |
| def | update_metric |

Public Attributes

| binded |
| for_training |
| inputs_need_grad |
| optimizer_initialized |
| params_initialized |

Private Member Functions

| def | _reset_bind |

Private Attributes

| _context |
| _curr_module |
| _data_names |
| _fixed_param_names |
| _fixed_param_prefix |
| _label_names |
| _max_data_shapes |
| _max_label_shapes |
| _preload_opt_states |
| _symbol |
| _work_load_list |

Detailed Description

A mutable module is a module that supports variable input data.

Parameters

----------

symbol : Symbol

data_names : list of str

label_names : list of str

logger : Logger

context : Context or list of Context

work_load_list : list of number

max_data_shapes : list of (name, shape) tuples

Designates the data inputs whose shapes vary.

max_label_shapes : list of (name, shape) tuples

Designates the label inputs whose shapes vary.

fixed_param_prefix : list of str

Indicates the fixed parameters.
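To make "supports variable input data" concrete, the following is a minimal, library-free sketch of one way a module could handle input shapes that change between batches: it rebinds whenever the incoming shapes differ from the currently bound ones. `ToyExecutor` and `ToyMutableModule` are hypothetical names, and treating `max_data_shapes` as a per-dimension capacity check is an assumption of this sketch, not documented behavior of `MutableModule`.

```python
from typing import Dict, List, Optional, Tuple

Shape = Tuple[int, ...]
NamedShapes = List[Tuple[str, Shape]]


class ToyExecutor:
    """Stand-in for a bound executor: it only remembers its input shapes."""

    def __init__(self, shapes: Dict[str, Shape]) -> None:
        self.shapes = dict(shapes)


class ToyMutableModule:
    """Hypothetical illustration only; not the real MutableModule."""

    def __init__(self, data_names: List[str],
                 max_data_shapes: Optional[NamedShapes] = None) -> None:
        self.data_names = list(data_names)
        # Assumption: the maximum shapes act as an upper bound per dimension.
        self.max_data_shapes = dict(max_data_shapes or [])
        self.executor: Optional[ToyExecutor] = None
        self.rebind_count = 0

    def bind(self, data_shapes: NamedShapes) -> None:
        shapes = dict(data_shapes)
        for name, shape in shapes.items():
            limit = self.max_data_shapes.get(name)
            if limit is not None and any(s > m for s, m in zip(shape, limit)):
                raise ValueError(f"{name}: shape {shape} exceeds maximum {limit}")
        self.executor = ToyExecutor(shapes)

    def forward(self, batch_shapes: NamedShapes) -> Dict[str, Shape]:
        # Rebind only when the incoming shapes differ from the bound ones.
        if self.executor is None or self.executor.shapes != dict(batch_shapes):
            self.bind(batch_shapes)
            self.rebind_count += 1
        return self.executor.shapes
```

In this sketch, two consecutive batches with the same shape share one binding, while a batch with a different shape triggers exactly one rebind.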

Constructor & Destructor Documentation

| def core.module.MutableModule.__init__ | ( | self, symbol, data_names, label_names, logger = logging, context = ctx.cpu(), work_load_list = None, max_data_shapes = None, max_label_shapes = None, fixed_param_prefix = None | ) |

Member Function Documentation

| def core.module.MutableModule._reset_bind | ( | self | ) | [private] |

| def core.module.MutableModule.backward | ( | self, out_grads = None | ) |

| def core.module.MutableModule.bind | ( | self, data_shapes, label_shapes = None, for_training = True, inputs_need_grad = False, force_rebind = False, shared_module = None, grad_req = 'write' | ) |

| def core.module.MutableModule.data_names | ( | self | ) |

| def core.module.MutableModule.data_shapes | ( | self | ) |

| def core.module.MutableModule.fit | ( | self, train_data, eval_data = None, eval_metric = 'acc', epoch_end_callback = None, batch_end_callback = None, kvstore = 'local', optimizer = 'sgd', optimizer_params = (('learning_rate', 0.01),), eval_end_callback = None, eval_batch_end_callback = None, initializer = Uniform(0.01), arg_params = None, aux_params = None, allow_missing = False, force_rebind = False, force_init = False, begin_epoch = 0, num_epoch = None, validation_metric = None, monitor = None, prefix = None, state = None | ) |

Train the module parameters.

Parameters

----------

train_data : DataIter

eval_data : DataIter

If not `None`, it is used as the validation set, and performance is evaluated

on it after each epoch.

eval_metric : str or EvalMetric

Default `'acc'`. The performance measure displayed during training.

epoch_end_callback : function or list of function

Each callback will be called with the current `epoch`, `symbol`, `arg_params`

and `aux_params`.

batch_end_callback : function or list of function

Each callback will be called with a `BatchEndParam`.

kvstore : str or KVStore

Default `'local'`.

optimizer : str or Optimizer

Default `'sgd'`

optimizer_params : dict

Default `(('learning_rate', 0.01),)`. The parameters for the optimizer constructor.

The default value is a tuple of pairs rather than a `dict`, only to avoid the

pylint warning about dangerous default values.

eval_end_callback : function or list of function

These will be called at the end of each full evaluation, with the metrics over

the entire evaluation set.

eval_batch_end_callback : function or list of function

These will be called at the end of each minibatch during evaluation

initializer : Initializer

Will be called to initialize the module parameters if not already initialized.

arg_params : dict

Default `None`. If not `None`, it should contain existing parameters from a trained

model or parameters loaded from a checkpoint (a previously saved model). In this case,

the values here are used to initialize the module parameters, unless they

have already been initialized by the user via a call to `init_params` or `fit`.

`arg_params` takes priority over `initializer`.

aux_params : dict

Default `None`. Similar to `arg_params`, except for auxiliary states.

allow_missing : bool

Default `False`. Indicates whether missing parameters are allowed when `arg_params`

and `aux_params` are not `None`. If this is `True`, the missing parameters

are initialized via the `initializer`.

force_rebind : bool

Default `False`. Whether to force rebinding of the executors if they are already bound.

force_init : bool

Default `False`. Indicates whether to force initialization even if the

parameters are already initialized.

begin_epoch : int

Default `0`. Indicates the starting epoch. Usually, when resuming from a

checkpoint saved at epoch N of a previous training phase, this value should be

set to N+1.

num_epoch : int

Number of epochs to run training.

Examples

--------

An example of using fit for training::

>>> # Assume the training DataIter and validation DataIter are ready

>>> mod.fit(train_data=train_dataiter, eval_data=val_dataiter,

...         optimizer_params={'learning_rate': 0.01, 'momentum': 0.9},

...         num_epoch=10)
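The interaction between `begin_epoch`, `num_epoch` and `epoch_end_callback` described above can be sketched without the library as a plain loop. This is only an illustration: `run_epochs`, `as_list` and `train_one_epoch` are hypothetical names, and treating `num_epoch` as an exclusive upper bound on the epoch index (rather than a count of additional epochs) is an assumption of the sketch.

```python
from typing import Any, Callable, Dict, List, Optional


def as_list(obj: Any) -> List[Any]:
    """Accept a single callback, a list of callbacks, or None."""
    if obj is None:
        return []
    return list(obj) if isinstance(obj, (list, tuple)) else [obj]


def run_epochs(train_one_epoch: Callable[[int], None],
               symbol: Any,
               arg_params: Dict[str, Any],
               aux_params: Dict[str, Any],
               begin_epoch: int = 0,
               num_epoch: Optional[int] = None,
               epoch_end_callback: Any = None) -> List[int]:
    """Run the epoch loop and return the indices of the epochs that ran."""
    if num_epoch is None:
        raise ValueError("num_epoch must be specified")
    callbacks = as_list(epoch_end_callback)
    completed = []
    # Assumption: num_epoch is an exclusive upper bound, so resuming with
    # begin_epoch=N+1 runs epochs N+1 .. num_epoch-1.
    for epoch in range(begin_epoch, num_epoch):
        train_one_epoch(epoch)
        # Each callback receives epoch, symbol, arg_params and aux_params.
        for callback in callbacks:
            callback(epoch, symbol, arg_params, aux_params)
        completed.append(epoch)
    return completed
```

Under these assumptions, resuming from a checkpoint saved at epoch 2 with `begin_epoch=3` and `num_epoch=5` runs epochs 3 and 4 only.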

| def core.module.MutableModule.forward | ( | self, data_batch, is_train = None | ) |

| def core.module.MutableModule.get_input_grads | ( | self, merge_multi_context = True | ) |

| def core.module.MutableModule.get_outputs | ( | self, merge_multi_context = True | ) |

| def core.module.MutableModule.get_params | ( | self | ) |

| def core.module.MutableModule.init_optimizer | ( | self, kvstore = 'local', optimizer = 'sgd', optimizer_params = (('learning_rate', 0.01),), force_init = False | ) |
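The `optimizer_params` default in this signature is a tuple of pairs rather than a `dict`, which the `fit` documentation attributes to avoiding a mutable default value. A minimal sketch of how such a default can be normalized to a `dict` is shown here; `normalize_optimizer_params` is a hypothetical helper, not part of this class.

```python
from typing import Any, Dict, Iterable, Mapping, Tuple, Union

ParamSpec = Union[Mapping[str, Any], Iterable[Tuple[str, Any]]]


def normalize_optimizer_params(
    optimizer_params: ParamSpec = (("learning_rate", 0.01),),
) -> Dict[str, Any]:
    """Return a fresh dict built from a dict or from (name, value) pairs."""
    # dict() accepts both forms and always creates a new object, so callers
    # can mutate the result without touching the immutable tuple default.
    return dict(optimizer_params)
```

Because the default is immutable and every call returns a new `dict`, adding a key such as `momentum` to one result does not leak into later calls.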

| def core.module.MutableModule.init_params | ( | self, initializer = Uniform(0.01), arg_params = None, aux_params = None, allow_missing = False, force_init = False, allow_extra = False | ) |
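The `fit` documentation describes how `initializer`, `arg_params`, `allow_missing` and `force_init` interact. The precedence can be sketched as follows, using plain dicts in place of real parameter arrays. `resolve_params` and its `current` argument are hypothetical, raising `KeyError` for a missing parameter is a choice of this sketch, and `allow_extra` is left out because its behavior is not described on this page.

```python
from typing import Any, Callable, Dict, List, Optional


def resolve_params(param_names: List[str],
                   initializer: Callable[[str], Any],
                   arg_params: Optional[Dict[str, Any]] = None,
                   allow_missing: bool = False,
                   current: Optional[Dict[str, Any]] = None,
                   force_init: bool = False) -> Dict[str, Any]:
    """Pick a value for every parameter name, following the documented order."""
    # Already-initialized parameters are kept unless force_init is set.
    if current is not None and not force_init:
        return dict(current)
    resolved = {}
    for name in param_names:
        if arg_params is not None and name in arg_params:
            # arg_params takes priority over the initializer.
            resolved[name] = arg_params[name]
        elif arg_params is not None and not allow_missing:
            raise KeyError(f"{name} is missing from arg_params")
        else:
            # No arg_params at all, or a missing entry with allow_missing=True.
            resolved[name] = initializer(name)
    return resolved
```

With `arg_params={"w": 1.0}` and `allow_missing=True`, the parameter `w` keeps the supplied value while any other parameter falls back to the initializer.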

| def core.module.MutableModule.install_monitor | ( | self, mon | ) |

| def core.module.MutableModule.label_shapes | ( | self | ) |

| def core.module.MutableModule.output_names | ( | self | ) |

| def core.module.MutableModule.output_shapes | ( | self | ) |

| def core.module.MutableModule.save_checkpoint | ( | self, prefix, epoch, save_optimizer_states = False | ) |

Save current progress to checkpoint.

Use mx.callback.module_checkpoint as epoch_end_callback to save during training.

Parameters

----------

prefix : str

The file prefix to checkpoint to

epoch : int

The current epoch number

save_optimizer_states : bool

Whether to save the optimizer states so that training can be resumed
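As an illustration of saving during training through an `epoch_end_callback`, the sketch below builds a callback that forwards to `save_checkpoint`. It is not the implementation of `mx.callback.module_checkpoint`: `make_checkpoint_callback` and `RecordingModule` are hypothetical, the `period` argument is an addition of this sketch, and numbering checkpoints from 1 (`epoch + 1`) is an assumption made here.

```python
from typing import Any, Callable, List, Tuple


class RecordingModule:
    """Hypothetical stand-in that only records save_checkpoint calls."""

    def __init__(self) -> None:
        self.saved: List[Tuple[str, int, bool]] = []

    def save_checkpoint(self, prefix: str, epoch: int,
                        save_optimizer_states: bool = False) -> None:
        self.saved.append((prefix, epoch, save_optimizer_states))


def make_checkpoint_callback(mod: Any, prefix: str, period: int = 1,
                             save_optimizer_states: bool = False
                             ) -> Callable[..., None]:
    """Return an epoch-end callback that checkpoints every `period` epochs."""
    if period < 1:
        raise ValueError("period must be at least 1")

    def _callback(epoch: int, symbol: Any = None,
                  arg_params: Any = None, aux_params: Any = None) -> None:
        # Assumption of this sketch: epochs are 0-based in the loop and
        # checkpoints are numbered from 1.
        if (epoch + 1) % period == 0:
            mod.save_checkpoint(prefix, epoch + 1, save_optimizer_states)

    return _callback
```

The returned function takes the same four arguments that the `fit` documentation lists for `epoch_end_callback`, so it could be passed there directly in place of a library-provided callback.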

| def core.module.MutableModule.update | ( | self | ) |

| def core.module.MutableModule.update_metric | ( | self, eval_metric, labels | ) |

Member Data Documentation

core::module.MutableModule::_context [private] |

core::module.MutableModule::_curr_module [private] |

core::module.MutableModule::_data_names [private] |

core::module.MutableModule::_label_names [private] |

core::module.MutableModule::_symbol [private] |

The documentation for this class was generated from the following file: