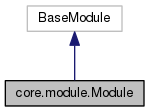

Inheritance diagram for core.module.Module:

| Public Member Functions | |
|---|---|
| def | `__init__` |
| def | `backward` |
| def | `bind` |
| def | `borrow_optimizer` |
| def | `data_names` |
| def | `data_shapes` |
| def | `forward` |
| def | `get_input_grads` |
| def | `get_outputs` |
| def | `get_params` |
| def | `get_states` |
| def | `init_optimizer` |
| def | `init_params` |
| def | `install_monitor` |
| def | `label_names` |
| def | `label_shapes` |
| def | `load_optimizer_states` |
| def | `output_names` |
| def | `output_shapes` |
| def | `reshape` |
| def | `save_checkpoint` |
| def | `save_optimizer_states` |
| def | `set_params` |
| def | `set_states` |
| def | `update` |
| def | `update_metric` |

| Static Public Member Functions | |
|---|---|
| def | `load` |

| Public Attributes | |
|---|---|
| `binded` | |
| `for_training` | |
| `inputs_need_grad` | |
| `optimizer_initialized` | |
| `params_initialized` | |

| Private Member Functions | |
|---|---|
| def | `_reset_bind` |
| def | `_sync_params_from_devices` |

| Private Attributes | |
|---|---|
| `_arg_params` | |
| `_aux_names` | |
| `_aux_params` | |
| `_context` | |
| `_data_names` | |
| `_data_shapes` | |
| `_exec_group` | |
| `_fixed_param_names` | |
| `_grad_req` | |
| `_kvstore` | |
| `_label_names` | |
| `_label_shapes` | |
| `_optimizer` | |
| `_output_names` | |
| `_param_names` | |
| `_params_dirty` | |
| `_preload_opt_states` | |
| `_state_names` | |
| `_symbol` | |
| `_update_on_kvstore` | |
| `_updater` | |
| `_work_load_list` | |

Detailed Description

Module is a basic module that wraps a `Symbol`. It is functionally the same as the `FeedForward` model, but exposed through the module API.

Parameters

----------

symbol : Symbol

The `Symbol` to wrap.

data_names : list of str

Default is `('data',)` for a typical model used in image classification.

label_names : list of str

Default is `('softmax_label',)` for a typical model used in image classification.

logger : Logger

Default is `logging`.

context : Context or list of Context

Default is `cpu()`.

work_load_list : list of number

Default `None`, indicating uniform workload.

fixed_param_names: list of str

Default `None`, indicating no network parameters are fixed.

state_names : list of str

States are similar to data and labels, but are not provided by the data iterator. Instead, they are initialized to 0 and can be set with `set_states()`.
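The `work_load_list` parameter controls how each batch is divided among the devices in `context`. The sketch below shows one plausible proportional split; the helper name `split_batch`, its rounding rule, and giving the rounding remainder to the last device are assumptions for illustration, not MXNet's exact implementation.

```python
def split_batch(batch_size, work_load_list=None, num_devices=1):
    """Hypothetical helper: split a batch into one contiguous slice per device.

    Each device's share is proportional to its weight in work_load_list;
    None means a uniform workload across num_devices devices.
    """
    if work_load_list is None:
        work_load_list = [1] * num_devices
    total = sum(work_load_list)
    # Proportional share per device, rounded to whole samples.
    counts = [round(w * batch_size / total) for w in work_load_list]
    # Rounding may leave the total off by a few samples; the last device
    # absorbs the difference so every sample is assigned exactly once.
    counts[-1] += batch_size - sum(counts)
    slices, start = [], 0
    for n in counts:
        slices.append(slice(start, start + n))
        start += n
    return slices


print(split_batch(10, num_devices=2))  # [slice(0, 5, None), slice(5, 10, None)]
print(split_batch(9, [2, 1]))          # [slice(0, 6, None), slice(6, 9, None)]
```

With the default `None`, every device receives the same number of samples (up to rounding), which matches the "uniform workload" described above.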

Constructor & Destructor Documentation

`def core.module.Module.__init__(self, symbol, data_names=('data',), label_names=('softmax_label',), logger=logging, context=ctx.cpu(), work_load_list=None, fixed_param_names=None, state_names=None)`

Member Function Documentation

`def core.module.Module._reset_bind(self)` [private]

`def core.module.Module._sync_params_from_devices(self)` [private]

`def core.module.Module.backward(self, out_grads=None)`

`def core.module.Module.bind(self, data_shapes, label_shapes=None, for_training=True, inputs_need_grad=False, force_rebind=False, shared_module=None, grad_req='write')`

Bind the symbols to construct executors. This is necessary before one can perform computation with the module.

Parameters

----------

data_shapes : list of (str, tuple)

Typically this is `data_iter.provide_data`.

label_shapes : list of (str, tuple)

Typically this is `data_iter.provide_label`.

for_training : bool

Default is `True`. Whether the executors should be bound for training.

inputs_need_grad : bool

Default is `False`. Whether the gradients with respect to the input data need to be computed. This is typically not needed, but may be needed when composing modules.

force_rebind : bool

Default is `False`. This function does nothing if the executors are already bound; with `force_rebind=True`, the executors are forced to rebind.

shared_module : Module

Default is `None`. This is used in bucketing. When not `None`, the shared module essentially corresponds to a different bucket: a module with a different symbol but the same set of parameters (e.g. unrolled RNNs of different lengths).
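The `force_rebind` rule can be pictured as a small state machine. `ToyModule` below is a hypothetical stand-in that tracks only the `binded` flag and a counter in place of real executors; it sketches the documented behavior and is not MXNet's `bind`.

```python
class ToyModule:
    """Hypothetical stand-in that models only the bind() bookkeeping."""

    def __init__(self):
        self.binded = False
        self.for_training = False
        self.inputs_need_grad = False
        self.data_shapes = None
        self.num_binds = 0  # counts how often "executors" were built

    def _reset_bind(self):
        # Forget the current executors so bind() builds new ones.
        self.binded = False

    def bind(self, data_shapes, for_training=True, inputs_need_grad=False,
             force_rebind=False):
        if force_rebind:
            self._reset_bind()
        if self.binded:
            return  # already bound and no rebind requested: do nothing
        self.data_shapes = data_shapes
        self.for_training = for_training
        self.inputs_need_grad = inputs_need_grad
        self.num_binds += 1  # stands in for constructing the executors
        self.binded = True


mod = ToyModule()
mod.bind([('data', (32, 100))])
mod.bind([('data', (64, 100))])                     # ignored: already bound
mod.bind([('data', (64, 100))], force_rebind=True)  # rebuilt
print(mod.num_binds, mod.data_shapes)  # 2 [('data', (64, 100))]
```

The second call is silently ignored, which is why a changed input shape needs either `force_rebind=True` or a dedicated method such as `reshape`.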

`def core.module.Module.borrow_optimizer(self, shared_module)`

`def core.module.Module.data_names(self)`

`def core.module.Module.data_shapes(self)`

`def core.module.Module.forward(self, data_batch, is_train=None)`

`def core.module.Module.get_input_grads(self, merge_multi_context=True)`

Get the gradients with respect to the inputs of the module.

Parameters

----------

merge_multi_context : bool

Default is `True`. When data parallelism is used, the gradients are collected from multiple devices. A `True` value indicates that the collected results should be merged so that they look as if they came from a single executor.

Returns

-------

If `merge_multi_context` is `True`, the result looks like `[grad1, grad2]`. Otherwise, it looks like `[[grad1_dev1, grad1_dev2], [grad2_dev1, grad2_dev2]]`. All the output elements are `NDArray`.

`def core.module.Module.get_outputs(self, merge_multi_context=True)`

Get outputs of the previous forward computation.

Parameters

----------

merge_multi_context : bool

Default is `True`. When data parallelism is used, the outputs are collected from multiple devices. A `True` value indicates that the collected results should be merged so that they look as if they came from a single executor.

Returns

-------

If `merge_multi_context` is `True`, the result looks like `[out1, out2]`. Otherwise, it looks like `[[out1_dev1, out1_dev2], [out2_dev1, out2_dev2]]`. All the output elements are `NDArray`.
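With data parallelism, each output is computed in pieces on several devices. A minimal sketch of what merging means, assuming the batch is split along its first axis and using nested Python lists as stand-ins for `NDArray`; the helper name `merge_multi_context` is hypothetical here.

```python
from itertools import chain


def merge_multi_context(per_output_per_device):
    """Hypothetical helper: join each output's per-device pieces.

    Input layout:  [[out1_dev1, out1_dev2], [out2_dev1, out2_dev2]]
    Output layout: [out1, out2]
    Each piece is a list of per-sample rows, so concatenating the pieces
    in device order rebuilds the full batch along the first axis.
    """
    return [list(chain.from_iterable(pieces))
            for pieces in per_output_per_device]


# A batch of 4 split 2 + 2 across two devices; two outputs per device.
unmerged = [
    [[[0.1, 0.9], [0.8, 0.2]], [[0.3, 0.7], [0.6, 0.4]]],  # out1 pieces
    [[[1.0], [2.0]], [[3.0], [4.0]]],                      # out2 pieces
]
out1, out2 = merge_multi_context(unmerged)
print(len(out1), len(out2))  # 4 4
```

The same nesting applies to `get_input_grads` and `get_states`; only the meaning of the arrays changes.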

`def core.module.Module.get_params(self)`

`def core.module.Module.get_states(self, merge_multi_context=True)`

Get states from all devices.

Parameters

----------

merge_multi_context : bool

Default is `True`. When data parallelism is used, the states are collected from multiple devices. A `True` value indicates that the collected results should be merged so that they look as if they came from a single executor.

Returns

-------

If `merge_multi_context` is `True`, the result looks like `[state1, state2]`. Otherwise, it looks like `[[state1_dev1, state1_dev2], [state2_dev1, state2_dev2]]`. All the output elements are `NDArray`.

`def core.module.Module.init_optimizer(self, kvstore='local', optimizer='sgd', optimizer_params=(('learning_rate', 0.01),), force_init=False)`

Install and initialize optimizers.

Parameters

----------

kvstore : str or KVStore

Default `'local'`.

optimizer : str or Optimizer

Default `'sgd'`.

optimizer_params : dict

Default `(('learning_rate', 0.01),)`. The default is a tuple of pairs rather than a dictionary, to avoid pylint's warning about dangerous (mutable) default values.

force_init : bool

Default `False`. Whether to force re-initializing the optimizer when one is already installed.
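The tuple-of-pairs default for `optimizer_params` sidesteps Python's shared-mutable-default pitfall, which is what pylint's dangerous-default-value warning flags. A small sketch contrasting the two styles; `bad_init` and `good_init` are illustrative functions, not part of the module API.

```python
def bad_init(optimizer_params={'learning_rate': 0.01}):
    # This dict is created once, when the function is defined, and the
    # same object is handed to every caller that relies on the default.
    return optimizer_params


def good_init(optimizer_params=(('learning_rate', 0.01),)):
    # The tuple default is immutable; dict() builds a fresh dictionary on
    # every call and accepts either (key, value) pairs or an existing dict.
    return dict(optimizer_params)


bad_init()['learning_rate'] = 1.0   # silently changes the shared default
good_init()['learning_rate'] = 1.0  # only changes a throwaway copy
print(bad_init())                   # {'learning_rate': 1.0}
print(good_init())                  # {'learning_rate': 0.01}
print(good_init({'learning_rate': 0.1}))  # {'learning_rate': 0.1}
```

Because `dict()` accepts both forms, callers can still pass an ordinary dictionary even though the documented type is `dict`.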

`def core.module.Module.init_params(self, initializer=Uniform(0.01), arg_params=None, aux_params=None, allow_missing=False, force_init=False, allow_extra=False)`

Initialize the parameters and auxiliary states.

Parameters

----------

initializer : Initializer

Called to initialize parameters if needed.

arg_params : dict

If not `None`, a dictionary of existing `arg_params`; initial values are copied from it.

aux_params : dict

If not `None`, a dictionary of existing `aux_params`; initial values are copied from it.

allow_missing : bool

If `True`, `arg_params` and `aux_params` may be missing some values, and the initializer is called to fill in the missing ones.

force_init : bool

If `True`, force re-initialization even if the parameters are already initialized.
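A minimal sketch of how `arg_params`, `allow_missing`, and `initializer` interact, using plain floats instead of `NDArray` and a hypothetical `init_params` helper; the exception type raised for a missing parameter is an assumption.

```python
def init_params(param_names, initializer, arg_params=None,
                allow_missing=False):
    """Hypothetical sketch of the copy-or-initialize rule.

    Values in arg_params are copied; any other parameter is filled by
    calling initializer(name). A parameter missing from a provided
    arg_params is an error unless allow_missing is True.
    """
    values = {}
    for name in param_names:
        if arg_params is not None and name in arg_params:
            values[name] = arg_params[name]  # copy the existing value
        elif arg_params is None or allow_missing:
            values[name] = initializer(name)  # fill in a fresh value
        else:
            raise RuntimeError('%s is not present in arg_params' % name)
    return values


def zero_init(name):
    return 0.0


print(init_params(['fc_weight', 'fc_bias'], zero_init))
# {'fc_weight': 0.0, 'fc_bias': 0.0}
print(init_params(['fc_weight', 'fc_bias'], zero_init,
                  arg_params={'fc_weight': 1.5}, allow_missing=True))
# {'fc_weight': 1.5, 'fc_bias': 0.0}
```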

`def core.module.Module.install_monitor(self, mon)`

`def core.module.Module.label_names(self)`

`def core.module.Module.label_shapes(self)`

`def core.module.Module.load(prefix, epoch, load_optimizer_states=False, **kwargs)` [static]

Create a model from a previously saved checkpoint.

Parameters

----------

prefix : str

Path prefix of the saved model files. You should have "prefix-symbol.json", "prefix-xxxx.params", and optionally "prefix-xxxx.states", where xxxx is the epoch number.

epoch : int

Epoch to load.

load_optimizer_states : bool

Whether to load optimizer states. The checkpoint must have been made with `save_optimizer_states=True`.

data_names : list of str

Default is `('data',)` for a typical model used in image classification.

label_names : list of str

Default is `('softmax_label',)` for a typical model used in image classification.

logger : Logger

Default is `logging`.

context : Context or list of Context

Default is `cpu()`.

work_load_list : list of number

Default `None`, indicating uniform workload.

fixed_param_names: list of str

Default `None`, indicating no network parameters are fixed.
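The file-naming convention described for `prefix` can be written out directly. This sketch assumes the `xxxx` placeholder is the epoch zero-padded to four digits; `checkpoint_files` is a hypothetical helper, not part of the module API.

```python
def checkpoint_files(prefix, epoch, with_optimizer_states=False):
    """Hypothetical helper: file names expected for a saved checkpoint."""
    files = ['%s-symbol.json' % prefix,             # network definition
             '%s-%04d.params' % (prefix, epoch)]    # parameter values
    if with_optimizer_states:
        # Only written when saving with save_optimizer_states=True.
        files.append('%s-%04d.states' % (prefix, epoch))
    return files


print(checkpoint_files('mymodel', 3, with_optimizer_states=True))
# ['mymodel-symbol.json', 'mymodel-0003.params', 'mymodel-0003.states']
```

Note that the symbol file carries no epoch number, so every epoch saved under one prefix shares the same `prefix-symbol.json`.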

`def core.module.Module.load_optimizer_states(self, fname)`

`def core.module.Module.output_names(self)`

`def core.module.Module.output_shapes(self)`

`def core.module.Module.reshape(self, data_shapes, label_shapes=None)`

`def core.module.Module.save_checkpoint(self, prefix, epoch, save_optimizer_states=False)`

Save the current progress to a checkpoint.

Use `mx.callback.module_checkpoint` as `epoch_end_callback` to save during training.

Parameters

----------

prefix : str

The file prefix for the checkpoint.

epoch : int

The current epoch number.

save_optimizer_states : bool

Whether to save optimizer states so that training can be resumed later.
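An epoch-end callback that checkpoints periodically can be sketched as below. This re-creates the callback pattern for illustration only: the factory's signature and the zero-based `iter_no` it receives are assumptions to check against `mx.callback.module_checkpoint` in your MXNet version, and `RecordingModule` is a hypothetical stand-in that merely records `save_checkpoint` calls.

```python
def module_checkpoint(mod, prefix, period=1, save_optimizer_states=False):
    """Sketch: build an epoch-end callback that saves every `period` epochs."""
    period = int(max(1, period))

    def _callback(iter_no, sym=None, arg=None, aux=None):
        # iter_no is assumed 0-based, so epoch iter_no + 1 has just finished.
        if (iter_no + 1) % period == 0:
            mod.save_checkpoint(prefix, iter_no + 1, save_optimizer_states)
    return _callback


class RecordingModule:
    """Hypothetical stand-in that records save_checkpoint calls."""

    def __init__(self):
        self.saved = []

    def save_checkpoint(self, prefix, epoch, save_optimizer_states=False):
        self.saved.append((prefix, epoch, save_optimizer_states))


mod = RecordingModule()
callback = module_checkpoint(mod, 'mymodel', period=2,
                             save_optimizer_states=True)
for epoch in range(5):
    callback(epoch)
print(mod.saved)  # [('mymodel', 2, True), ('mymodel', 4, True)]
```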

`def core.module.Module.save_optimizer_states(self, fname)`

`def core.module.Module.set_params(self, arg_params, aux_params, allow_missing=False, force_init=True)`

Assign parameter and aux state values.

Parameters

----------

arg_params : dict

Dictionary of name to value (`NDArray`) mapping.

aux_params : dict

Dictionary of name to value (`NDArray`) mapping.

allow_missing : bool

If `True`, `arg_params` and `aux_params` may be missing some values, and the initializer is called to fill in the missing ones.

force_init : bool

If `True`, force re-initialization even if the parameters are already initialized.

Examples

--------

An example of setting module parameters::

    >>> sym, arg_params, aux_params = \
    ...     mx.model.load_checkpoint(model_prefix, n_epoch_load)
    >>> mod.set_params(arg_params=arg_params, aux_params=aux_params)

`def core.module.Module.set_states(self, states=None, value=None)`

Set values for the states. Only one of `states` and `value` can be specified.

Parameters

----------

states : list of list of NDArrays

Source state arrays, formatted like `[[state1_dev1, state1_dev2], [state2_dev1, state2_dev2]]`.

value : number

A single scalar value for all state arrays.
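A sketch of the two ways to set states, with nested lists standing in for the per-device state arrays laid out as `[[state1_dev1, state1_dev2], [state2_dev1, state2_dev2]]`. The helper is hypothetical: it returns new values instead of writing into device memory, and it additionally assumes that one of the two arguments must be given.

```python
def set_states(current, states=None, value=None):
    """Hypothetical sketch: return new per-device state values.

    `current` and `states` are laid out as [state][device][element].
    """
    if states is not None:
        assert value is None, 'Only one of states and value can be specified.'
        # Copy each source array into the matching state/device slot
        # (this sketch does not check shapes against `current`).
        return [[list(arr) for arr in per_device] for per_device in states]
    assert value is not None, 'One of states and value must be specified.'
    # Broadcast one scalar into every element of every state array.
    return [[[value] * len(arr) for arr in per_device]
            for per_device in current]


# Two states on two devices, starting from 0 as the state_names entry says.
zeros = [[[0, 0], [0, 0]], [[0], [0]]]
print(set_states(zeros, value=1))  # [[[1, 1], [1, 1]], [[1], [1]]]
```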

`def core.module.Module.update(self)`

`def core.module.Module.update_metric(self, eval_metric, labels)`

Member Data Documentation

`core.module.Module._arg_params` [private]

`core.module.Module._aux_names` [private]

`core.module.Module._aux_params` [private]

`core.module.Module._context` [private]

`core.module.Module._data_names` [private]

`core.module.Module._data_shapes` [private]

`core.module.Module._exec_group` [private]

`core.module.Module._fixed_param_names` [private]

`core.module.Module._grad_req` [private]

`core.module.Module._kvstore` [private]

`core.module.Module._label_names` [private]

`core.module.Module._label_shapes` [private]

`core.module.Module._optimizer` [private]

`core.module.Module._output_names` [private]

`core.module.Module._param_names` [private]

`core.module.Module._params_dirty` [private]

`core.module.Module._preload_opt_states` [private]

`core.module.Module._state_names` [private]

`core.module.Module._symbol` [private]

`core.module.Module._update_on_kvstore` [private]

`core.module.Module._updater` [private]

`core.module.Module._work_load_list` [private]

The documentation for this class was generated from the following file: